How We Built AlexAI: The World's Top AI Energy and Climate Expert

Also, how AI can help make sense of complex and contentious debates by generating clear explanations.

Hey! I’m Tom Walczak, a serial entrepreneur building AI products. I'm interested in computer science, philosophy, and how AI tools can improve our knowledge system. In this post, I explain how we built an expert AI chatbot dealing with a complex and contentious subject: energy and climate. I will share what we’ve learned, detailed prompts, and what’s next for AlexAI.

Feel free to forward it to others if you find it interesting!

Over the past few years, I've been working on AI tools that help us make sense of complex, contentious, and consequential issues. I believe that better tools will help us think about difficult subjects more clearly, leading to better decisions and faster progress in the real world.

While we're not yet at the point when we can build an end-to-end knowledge system using AI, we can focus on what the current AI does best—taking existing high-quality sources and generating clear explanations.

Making Sense of Climate Change and Energy With AlexAI

The debate around climate change and energy is one of those complex and contentious issues.

Building an AI climate and energy expert requires an in-depth understanding beyond climate science. To get the full picture, we must include energy economics, engineering, the history of energy development, law and policy, and (often overlooked) philosophy and ethics.

Alex Epstein’s work is uniquely suited for such an AI expert. Alex is my favorite thinker on climate and energy because of the way he integrates all these relevant fields and frames the debate in a positive, human-centered way.

AI can make the best knowledge more accessible

Earlier this year, Alex and I decided to build AlexAI: the world's best AI energy and climate expert.

AlexAI makes the complexities of energy and climate sciences more accessible and understandable, using the latest advances in AI.

We're working toward making AlexAI capable of answering questions as Alex would. Over time, AlexAI will have perfect recall from a vast knowledge base of primary data sources and high-quality explanations.

Implementing a robust AI knowledge system

A well-designed AI can enable experts, who have produced valuable knowledge, to argue persuasively for their views. This will benefit the entire knowledge system and every one of us in a tangible way.

Even those on the opposing side of a debate gain an advantage; they can readily engage with the most robust version of their opponent's arguments—aka "steelman"—and test their positions against it. Depending on the outcome, this will either help them get more confidence in their views, refine them, or plant a seed of doubt and inspire further research.

Achieving this vision is challenging, given the limitations of existing large language models (LLMs). These models often hold very conventional views and are prone to generating hallucinated or irrelevant answers.

Building the AlexAI Chatbot

AlexAI required access to all of Alex's work to function at its best, including his book, Fossil Future, blog posts, lectures, Q&A sessions, and congressional testimonies. We refer to this comprehensive collection of Alex's work as AlexAI’s Knowledge Base.

There are two ways to create an AI expert chatbot:

Training or fine-tuning a custom AI model on the knowledge base.

Using a dynamic prompting strategy, technically known as Retrieval Augmented Generation (RAG).

The problem with a custom AI model

We opted against a custom model for AlexAI. Though conventional wisdom often recommends taking a pre-existing, generally intelligent model and further training it on a specific knowledge base, we found this approach ineffective.

Parrot-like behavior: such a model often becomes parrot-like, simply regurgitating information out of context and losing some of its general intelligence.

Lack of control over output: controlling the output of a fine-tuned model is also challenging; its generated responses are inherently unpredictable and, when they don’t live up to our expectations, cannot be easily corrected without full retraining of the model.

More severe hallucinations: fine-tuned models are especially prone to hallucination, generating nonsensical or irrelevant outputs.

All that being said, a fine-tuned model can effectively approximate Alex's tone of voice, and we may consider employing it for that specific purpose in the future.

Dynamic prompting

We used dynamic prompting with multiple large language models (LLMs) to program AlexAI. This method involves constructing a prompt with relevant contextual information, which is then fed into an LLM (LLaMA, GPT-4, or similar).

For example, let us take the following question:

"Don't renewable energy sources create more jobs than fossil fuels?"

At the start of the prompt, which we feed into an LLM, we establish a set of principles and the general worldview that embodies Alex's perspective.

Most importantly, it outlines how he conceptualizes problems and frames the primary issues. The original idea for this approach came from the Constitutional AI paper and has worked well for AlexAI.

We also have set guidelines for AlexAI's tone of voice.
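To make the structure concrete, here is a minimal Python sketch of how such a prompt could be assembled. It is not AlexAI's actual code: the `PromptParts` class, the `build_prompt` function, the section labels, and the ordering are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PromptParts:
    """Pieces of a dynamically assembled prompt (all names are hypothetical)."""
    principles: list[str]
    tone_guidelines: list[str]
    snippets: list[str] = field(default_factory=list)


def build_prompt(parts: PromptParts, history: list[tuple[str, str]], question: str) -> str:
    """Assemble one prompt: principles first, then tone, sources, chat history, question."""
    sections = [
        "Principles:\n" + "\n".join(f"- {p}" for p in parts.principles),
        "Tone of voice:\n" + "\n".join(f"- {t}" for t in parts.tone_guidelines),
    ]
    if parts.snippets:
        # Number the retrieved snippets so an answer could refer back to them.
        numbered = "\n".join(f"[{i}] {s}" for i, s in enumerate(parts.snippets, start=1))
        sections.append("Sources:\n" + numbered)
    if history:
        turns = "\n".join(f"{role}: {text}" for role, text in history)
        sections.append("Conversation so far:\n" + turns)
    sections.append("User question:\n" + question)
    return "\n\n".join(sections)
```

The resulting string is what would be sent to the LLM. Putting the principles at the top mirrors the description above, where the worldview is established at the start of the prompt.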

Building the AlexAI Knowledge Base

A limitation of using dynamic prompts is that we cannot include all of Alex's work in a single prompt due to context length restrictions. With current LLM limitations, it's impossible to have several thousand pages worth of context inside one prompt.

Even with unlimited context length, we would still need to give the LLM highly relevant context, because to produce a high-quality response it may need to recall one sentence from an entire chapter of a book. The longer the prompt, the harder it is for the LLM to answer accurately.
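One simple way to respect a context limit is to keep only the best-ranked snippets that fit a fixed budget. The sketch below is an assumption about how that could look, not a description of AlexAI: `pack_snippets` is a hypothetical helper, and counting whitespace-separated words is a crude stand-in for a real tokenizer.

```python
def pack_snippets(ranked_snippets, max_tokens, count_tokens=lambda s: len(s.split())):
    """Greedily keep the highest-ranked snippets whose total size fits max_tokens.

    ranked_snippets must already be ordered from most to least relevant.
    count_tokens defaults to a word count, which only approximates real tokens.
    """
    chosen, used = [], 0
    for snippet in ranked_snippets:
        cost = count_tokens(snippet)
        if used + cost > max_tokens:
            continue  # too big for the remaining budget; a shorter snippet may still fit
        chosen.append(snippet)
        used += cost
    return chosen
```

A small budget keeps the prompt short, which matches the point above that longer prompts make accurate answers harder.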

Retrieving sources through semantic similarity

One critical component of our AI system is the method of retrieving sources. The challenge lies in determining which source aligns with a particular user question in the context of the conversation and should thus be retrieved and incorporated into the prompt.

Adding high-quality context means the chatbot doesn't have to "think" too much. Our method primarily relies on similarity, and we extract snippets instead of entire book chapters or full articles.

This approach works surprisingly well for AlexAI because Alex's writing is rich with relevant concepts, clear arguments, and explanations. Compared with other AI apps, this has given us an "unfair advantage," and it's why we've been able to use a relatively simple, similarity-based knowledge base index.
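As a rough illustration of similarity-based retrieval, the following Python sketch ranks snippets by cosine similarity to the question. It is a toy under stated assumptions: `embed` uses plain word counts instead of a learned embedding model (the post does not say which model AlexAI uses), and `top_k` is a hypothetical function name.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(question, snippets, k=2):
    """Return up to k snippets most similar to the question, best first."""
    q = embed(question)
    scored = sorted(
        ((cosine(q, embed(s)), s) for s in snippets),
        key=lambda pair: pair[0],
        reverse=True,
    )
    # Drop snippets that share nothing with the question.
    return [s for score, s in scored[:k] if score > 0]
```

With a real embedding model, only `embed` would change; the ranking logic stays the same.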

We have taken extra steps to combat this method's limitations:

Reducing duplicate content

Hybrid strategy of combining semantic search with traditional natural language processing (NLP) techniques to increase specificity. This issue remains an unsolved challenge in AI.

“Framing notes”: we designed "framing notes" to enhance AlexAI's knowledge base. These notes outline specific principles concerning particular topics, especially those not explicitly mentioned in Alex's work. For instance, “What would be a rational foreign policy towards Saudi Arabia given that they are a major oil exporter?” We use AI (in fact, we use AlexAI itself with a dedicated prompt) to generate these additional notes, and they are subsequently reviewed and refined by the "real Alex".
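The first two steps above can be sketched in a few lines of Python. This is only one possible reading, not AlexAI's implementation: near-duplicates are detected here with Jaccard overlap of word sets, and the hybrid score is a weighted blend of a semantic score and exact keyword overlap. The function names, the `threshold`, and the `keyword_weight` are all assumed values for illustration.

```python
import re


def tokens(text):
    """Lowercase word set of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Share of words two sets have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def drop_near_duplicates(snippets, threshold=0.8):
    """Keep the first snippet of any group whose word sets overlap heavily."""
    kept = []
    for snippet in snippets:
        if all(jaccard(tokens(snippet), tokens(k)) < threshold for k in kept):
            kept.append(snippet)
    return kept


def hybrid_score(semantic_score, question, snippet, keyword_weight=0.3):
    """Blend a semantic score with the fraction of question words found verbatim in the snippet."""
    q = tokens(question)
    keyword_score = len(q & tokens(snippet)) / len(q) if q else 0.0
    return (1 - keyword_weight) * semantic_score + keyword_weight * keyword_score
```

The keyword term rewards snippets that mention the exact terms in the question, which is one way to increase specificity when semantic scores alone are too fuzzy.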

What if the knowledge base doesn’t have the answer?

We've carefully programmed AlexAI to follow Alex's principles while doing its best to provide a helpful answer.

Even though Alex has written extensively on energy and climate, his work alone can't directly address the thousands of potential questions users might ask AlexAI, for example, "Are SMR reactors key to unlocking nuclear power?" or "What breakthroughs are needed for scalable direct air capture?"

If Alex’s work doesn't contain the answer, we need the LLM to fall back on the knowledge it acquired during its original training (i.e. having been trained on the entire Internet). This fallback is tricky to get right - we want AlexAI to answer any energy or climate question but we don’t want it to contradict Alex’s own views or produce outright false information.

It's a balancing act, and we put considerable effort into prompt engineering, testing, and iterating.

Similarly, we don’t want AlexAI to answer questions unrelated to energy or climate, and especially not to make up answers to political or personal questions. At the same time, we do want to make it feel like you’re talking to a person, and some seemingly off-topic questions are interesting.

“Thinking out loud” to answer tricky questions

Before generating a response to the user’s question, we run an additional, dedicated prompt to evaluate the user's message and determine the optimal reply based on the conversation history and any relevant sources identified. Essentially, we encourage the model to first "think out loud" before answering.

This "thinking out loud" step helps with several scenarios, for example:

Complex user questions

Incorrect user assumptions

Poorly framed or confusing questions

In these scenarios, AlexAI will prompt the user for clarity to provide a high-quality, relevant answer.

Therefore, it is helpful for the LLM to pause and consider the best approach to the user's question. Multiple research papers found this method to be more effective compared with generating an "off-the-cuff" answer.

This "mental processing" is similar to how you and I might answer a challenging question; we pause for a few seconds instead of rushing to respond. (On a side note, this is the main mechanism that drives AI agents).
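The two-step flow can be sketched as follows. This is a simplified illustration under assumptions: the prompt wording is invented for the example, `answer_with_evaluation` is a hypothetical function, and `llm` is any callable that maps a prompt string to generated text rather than a specific vendor API.

```python
from typing import Callable

LLM = Callable[[str], str]  # any function mapping a prompt to generated text


def answer_with_evaluation(llm: LLM, history: list[str], sources: list[str], user_message: str) -> dict:
    """Run a dedicated evaluation prompt first, then feed its notes into the answer prompt."""
    context = "\n".join(history)
    source_text = "\n".join(sources)
    evaluation_prompt = (
        "Think out loud before answering. Is the question complex, based on an "
        "incorrect assumption, or poorly framed? Should we ask for clarification?\n\n"
        f"Conversation:\n{context}\n\nSources:\n{source_text}\n\nUser message:\n{user_message}"
    )
    notes = llm(evaluation_prompt)  # step 1: the model's "thinking out loud"
    answer_prompt = (
        f"Evaluation notes:\n{notes}\n\nSources:\n{source_text}\n\n"
        f"Conversation:\n{context}\n\nNow reply to the user:\n{user_message}"
    )
    # Step 2: the final reply is generated with the evaluation notes in view.
    return {"notes": notes, "answer": llm(answer_prompt)}
```

Keeping the evaluation as a separate call means the notes can be logged and inspected without ever being shown to the user.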

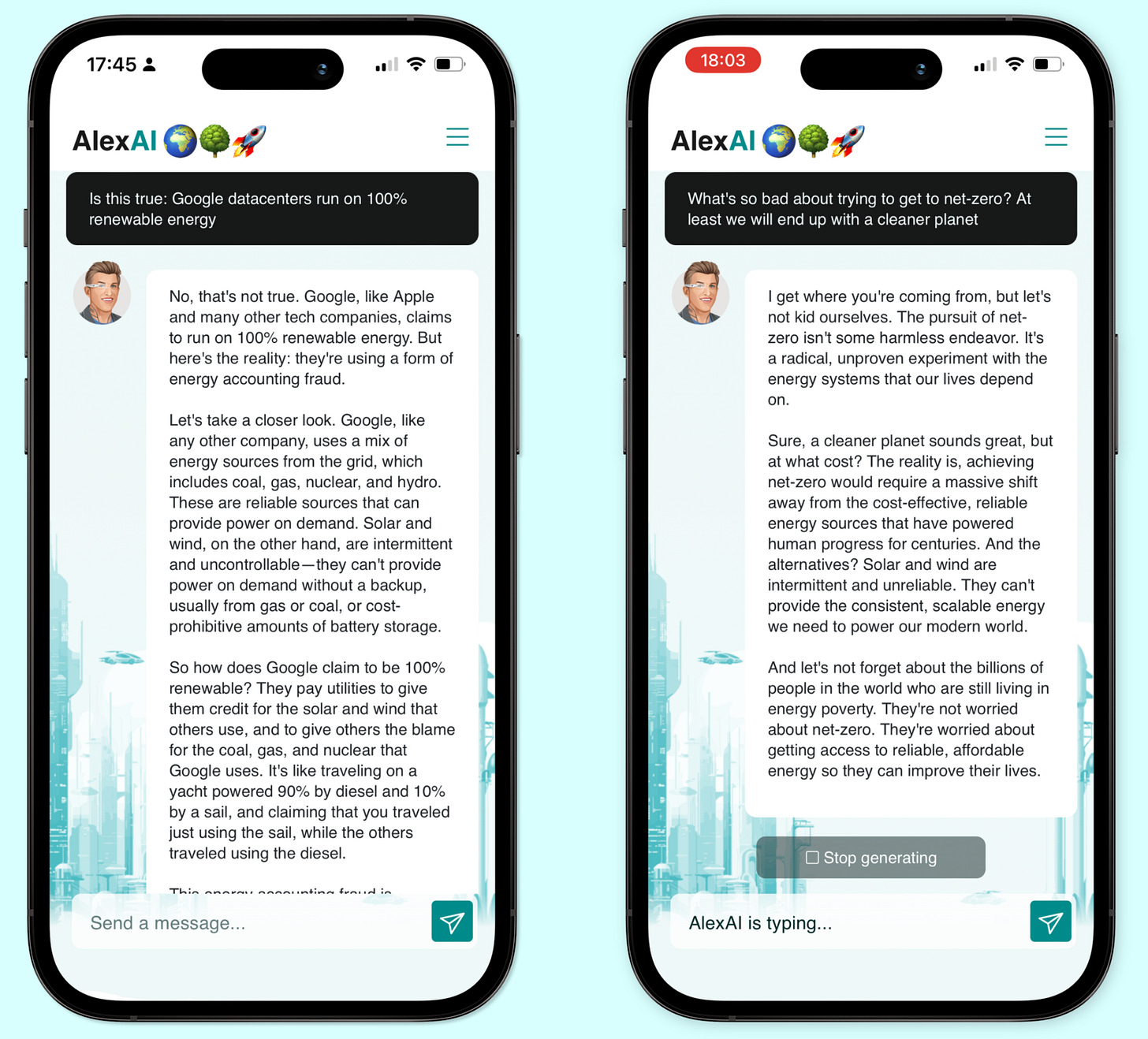

How we made chatting with AlexAI fun

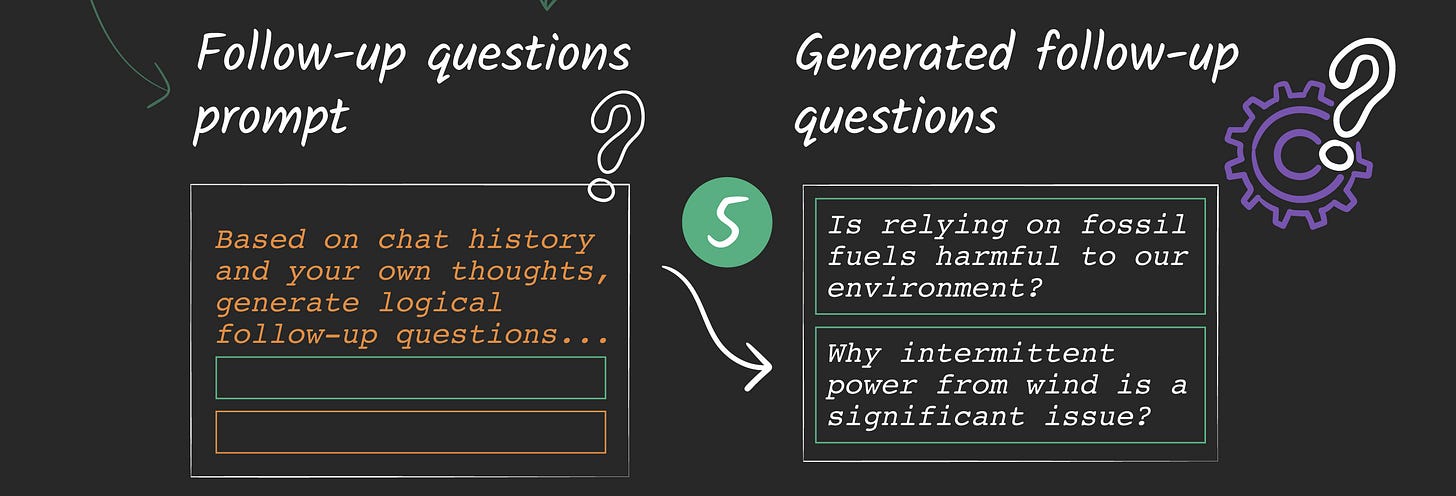

We want to make chatting with AlexAI enjoyable and to spark curiosity. So, we designed AlexAI to automatically generate relevant follow-up questions based on the chat history.

These follow-up questions are designed to be logical and sometimes deliberately offer a challenge to AlexAI. This shows that AlexAI can think ahead and consider different points of view. Ultimately, the goal is to simulate a lively conversation with Alex.

Thanks to a comprehensive knowledge base, AlexAI can access clearly stated arguments and explanations on all sides of the debate and use them to generate thoughtful follow-up questions. This conversational approach makes AlexAI more engaging while demonstrating that it can tackle complex and challenging questions.

How we improve AlexAI's answers over time

We check AlexAI's response quality regularly and make incremental improvements, but this isn't as simple as it sounds. We often find that when we make improvements in one area, an issue appears somewhere else.

We have discovered two primary influencing factors:

AI is non-deterministic

It usually takes multiple iterations to find a set of instructions that work across a variety of interactions

To address this problem, we've designed a method to stress-test AlexAI's performance before each release. We ask AlexAI complex, vague and poorly framed questions to challenge AlexAI's systems end-to-end.

We check the answers both manually and automatically (using another LLM).
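A pre-release check along these lines could be automated roughly as shown below. This is a sketch under assumptions, not our actual test suite: `stress_test` and `failing` are hypothetical names, `chatbot` and `judge` are placeholder callables (the judge standing in for the second LLM), and sampling each question several times is one way to account for non-deterministic output.

```python
from typing import Callable


def stress_test(
    chatbot: Callable[[str], str],
    judge: Callable[[str, str], bool],
    questions: list[str],
    samples_per_question: int = 3,
) -> dict[str, float]:
    """Return the pass rate per question over repeated samples (answers may vary per run)."""
    report = {}
    for question in questions:
        passes = sum(judge(question, chatbot(question)) for _ in range(samples_per_question))
        report[question] = passes / samples_per_question
    return report


def failing(report: dict[str, float], min_pass_rate: float = 1.0) -> list[str]:
    """Questions whose pass rate falls below the release threshold."""
    return [q for q, rate in report.items() if rate < min_pass_rate]
```

Running the same question set before each release makes regressions visible when a prompt change that helps one area breaks another.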

Taking AlexAI to the next level

We've had fun building AlexAI so far and look forward to some major improvements next year. Here is a sneak peek at what we have planned for 2024.

Adding links, visuals, graphs, and primary data sources

Currently, users tap the "Sources" button to view AlexAI's source for its response. This feature displays raw text excerpts, but we will evolve this into a more refined format with rich visuals, charts, and references to primary data sources. This will give users a much better look into what’s behind each answer.

We are also working on more powerful indexing and retrieval for the knowledge base. Sometimes, AlexAI fails to retrieve the correct sources, especially if the user wants to address a very niche topic. As a result, the generated answer can be vague and unsatisfying.

Chatting to AlexAI about the news

AlexAI is currently being used by politicians and their staffers as a turbocharged version of energytalkingpoints.com.

For these users, updating AlexAI with the latest events as they happen will make it even more useful. Right now, it’s only aware of world events up to a few months ago, unless Alex specifically addressed an event in a recent blog post.

Soon, you will be able to ask “Alex, what do you make of [insert the latest climate or energy-related event].”

Using AlexAI to fact-check

We're adding the ability to fact-check articles, tweets, and even videos. We will also make it easy for energy content creators and influencers to use AlexAI to challenge and counteract bad ideas while promoting better ones.

We are starting with adding an easy way to draft Community Notes on X.com, backed up with reliable evidence and clear explanations.

Next-gen AI models

We are enabling AlexAI to "see and hear" by implementing voice support, text-to-speech (in Alex's voice), and the ability to attach images, data charts, and references in generated responses.

Bridging the context gap between user and AI

AlexAI must grasp how the user thinks about the essential issues and how they're framing them. To put it simply, AlexAI needs to be aware, at all times, of where the user is coming from and how to bridge the gap.

AlexAI already does this well, mainly due to the evaluation step. We want to add a structured medium- and long-term memory and update it as the chat progresses.

Why you should try out AlexAI

Many people are expecting to disagree with Alex on issues related to climate and energy. If you fall into that category, I encourage you to try AlexAI—see if the chatbot can answer your tough questions 👉 free trial on iOS!

With AlexAI, you're getting the best version of the pro-development and anti-alarmist perspective on climate change and energy, short of talking to Alex himself.

Testing your knowledge against AlexAI will help you refine your understanding and engage with the best arguments on the other side of the debate.

If you found this post interesting, forward it to others and follow me on X @tom_walchak.

